The last module in this course is about machine learning and gestures. We will use a phone to record movement and try to teach the machine to recognize what we do.

What makes ML interesting is how it differs from "normal programming". Traditional programming is explicit logic built from if/else statements, while modern AI works more like fuzzy logic, making judgments and discriminations based on earlier experience. This can make programming easier, but it can also lead to very unpredictable results, because the logic becomes a black box that is hard to understand.

When the logic comes from training, there is a risk that unknown bias creeps in. When we define a human in a program, we might think of features to identify them by, like legs, arms and so on. There is already a risk here, since we have to account for people without arms or legs. With ML this is even harder, as we might forget to teach it a lot of things. This was the case when Google launched filters for Hangouts: they trained the system on Google engineers, as these were the humans easiest to come by. This meant that it was not trained on black people, as Silicon Valley is very white.

Another thing to think about is what a gesture really is. A swipe on a phone is really easy for a human to identify, but describing one precisely is much harder. How long is it? How fast? It's interesting how all the simple things become complex when you really look at them.
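To see how many arbitrary choices a hand-written, if/else description of a swipe needs, here is a minimal sketch in Python. The `TouchSample` type, the function name and every threshold (minimum distance, maximum duration, allowed drift) are hypothetical values picked for illustration; they are not taken from any phone API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TouchSample:
    t: float  # time in seconds
    x: float  # horizontal position in pixels
    y: float  # vertical position in pixels


# Hypothetical thresholds: each one is an answer to "how long?" or "how fast?"
MIN_DISTANCE_PX = 100.0     # shorter than this is not a swipe
MAX_DURATION_S = 0.5        # slower than this is a drag, not a swipe
MAX_OFF_AXIS_RATIO = 0.5    # vertical drift allowed relative to horizontal travel


def detect_horizontal_swipe(samples: List[TouchSample]) -> Optional[str]:
    """Return 'left' or 'right' if the samples look like a swipe, else None."""
    if len(samples) < 2:
        return None
    first, last = samples[0], samples[-1]
    dx = last.x - first.x
    dy = last.y - first.y
    duration = last.t - first.t
    if duration <= 0 or duration > MAX_DURATION_S:
        return None  # invalid timing or too slow
    if abs(dx) < MIN_DISTANCE_PX:
        return None  # too short
    if abs(dy) > MAX_OFF_AXIS_RATIO * abs(dx):
        return None  # too diagonal
    return "right" if dx > 0 else "left"


swipe = [TouchSample(0.0, 50, 300), TouchSample(0.1, 150, 305), TouchSample(0.2, 260, 310)]
print(detect_horizontal_swipe(swipe))  # right
```

Each threshold is a judgment call: nudge one and some swipes a human would recognize get rejected. An ML model learns boundaries like these from examples instead, which is also why its decisions are harder to inspect.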

Assignment: Machine Learning

Brief: Explore and design movement, gestures and bodily interactions with sensors and ML

Materials: TensorFlow and sensors in a smartphone

Team: Lin and me

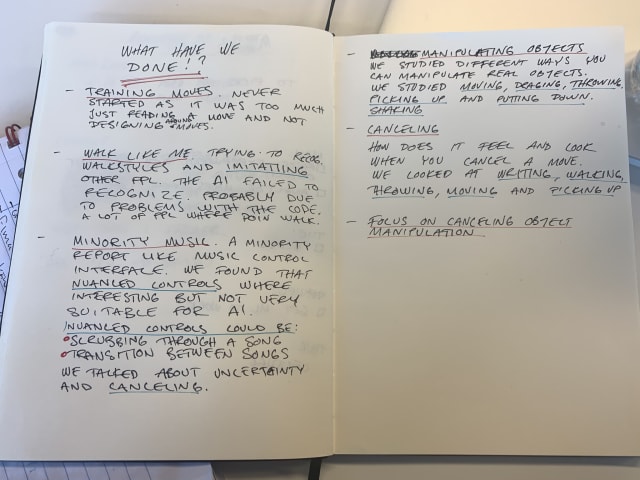

We started by toying around with the code Jens gave us. It's an extension of the Node JSON bridge by Clint that we used in Programming 2. Our first tries were recording some easy gestures like circles and lines. It worked OK, but the moves have to be the same length, and that might be limiting.
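One common workaround for a fixed-length requirement is to resample every recording to the same number of samples before training. Below is a minimal sketch in plain Python using linear interpolation. It assumes each recording is a list of (x, y, z) accelerometer readings taken at a roughly constant rate; the function name `resample` and the target length are hypothetical and not part of Jens's code or the Node bridge.

```python
from typing import List, Sequence, Tuple

Reading = Tuple[float, float, float]  # one (x, y, z) accelerometer sample


def resample(recording: Sequence[Reading], target_len: int) -> List[Reading]:
    """Linearly interpolate a recording to exactly target_len samples."""
    n = len(recording)
    if n == 0 or target_len < 1:
        raise ValueError("need a non-empty recording and target_len >= 1")
    if n == 1 or target_len == 1:
        # Nothing to interpolate between: repeat the first reading
        return [tuple(recording[0])] * target_len
    out: List[Reading] = []
    for i in range(target_len):
        # Position of output sample i on the original index axis [0, n - 1]
        pos = i * (n - 1) / (target_len - 1)
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        frac = pos - lo
        a, b = recording[lo], recording[hi]
        out.append(tuple(a[k] + (b[k] - a[k]) * frac for k in range(3)))
    return out


short = [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0)]
print(resample(short, 3))  # [(0.0, 0.0, 0.0), (0.5, 1.0, 1.5), (1.0, 2.0, 3.0)]
```

Stretching or squeezing every move to the same length throws away information about tempo, which may matter for gestures where speed is part of the meaning.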

Next we thought about which movements to analyze, and since we both like working out, we started to think in those terms: maybe checking whether you do your reps the right way, or counting reps. When we talked to Jens he didn't like the idea. He wanted us to go deeper and analyze what the movements really are. The course texts discuss this too: a move can be viewed from different perspectives.

When designing movement, The Mover is the first-person perspective. It is important because this is what the "end user" will experience. If a move feels weird, it should probably be designed another way. This is often forgotten when designing, for example, mobile apps: a hamburger menu in the top-left corner is in a terrible position for the user, but it looks good when the app is designed on a large screen.

The Observer is the view another person would have. This perspective can be important for seeing the social implications of a move. A silly example is children spinning around: they enjoy the movement, while adults see all the dangers to the room and the china.

The final perspective, The Machine, differs from The Observer because it has no understanding of cultural context. It can only sense what we have given it sensors for, and many kinaesthetic qualities known to the mover are lost. For example, the machine cannot see how hard you push, and we have to account for this when we want it to understand the movement.

In the end we want to find a mapping between what the mover feels and what the machine senses.

To dig deeper, we started investigating walks. We can identify people we know by their walk from far away, and yet it is hard to explain what makes a walk special.

We recorded ourselves walking back and forth and tried to train the machine to recognize us. The end goal was to use the machine to help us copy each other's walking styles, to get a sense of what it is like to walk like another person. We failed miserably: the machine could never identify our walking styles and always thought it was Lin.