Designing with texture

Introduction

Interaction designers are increasingly tasked with crafting nuanced digital feedback to inform and delight the user. However, few frameworks exist to help them design continuous feedback. Heyer (2018) proposes a set of lenses for analyzing interactive objects in terms of how they afford manipulation in different contexts and why this manipulation is integral to skillful coping. In this essay, I focus on one part of Heyer's reasoning: feedback and feedforward as texture. According to Heyer, texture is always present. It is part of the material, unnoticed yet always available: the noise of a car engine, the weight of a coffee thermos, the sound and vibration of a bike's tyres rolling on the road. These textures reveal something about the artefact's state and are a natural part of any mechanical machine or tool. Digital artefacts, on the other hand, have no intrinsic textural feedback, so designers need to create it in order to facilitate coping. The current paradigm in interaction design is not concerned with vague textural qualities; it prefers the precision of numbers and meters.

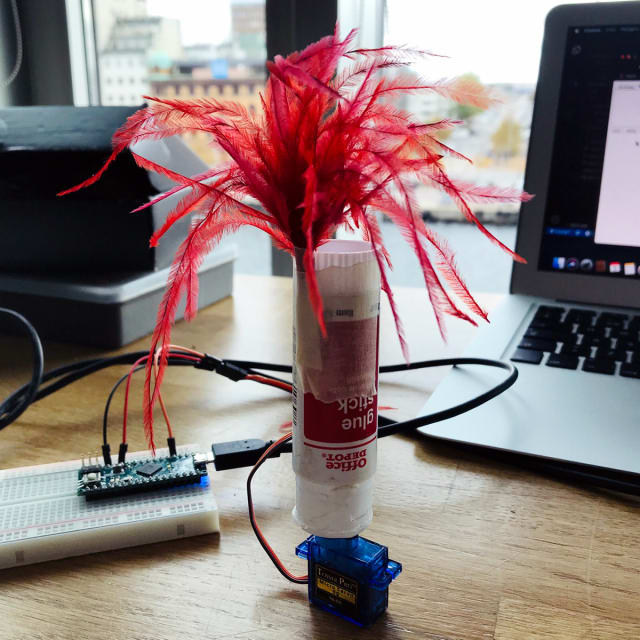

In the Interactivity course, my peer and I were tasked with designing for coping with servos. We worked with a beat, or rhythm, as feedback. While we did not arrive at a concrete application of the interaction we designed, I believe the reflections this exploration provoked highlight aspects of Heyer's theory that I might otherwise have overlooked. Based on them, I will discuss the potential of nuanced textural feedback, how it can benefit skillful coping, and what issues might arise when designing with textures.

Textural feedback

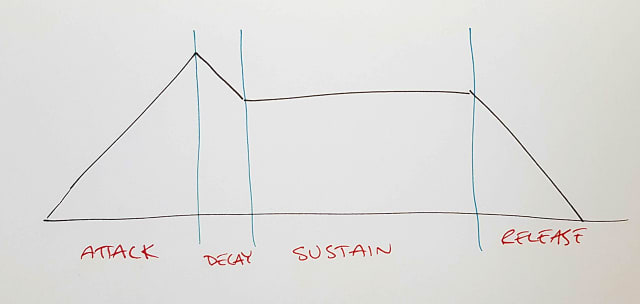

Textural feedback is when an artefact informs us of its state through its intrinsic features: the resistance when driving in a screw, the roar of a car engine, or the sound of the plank as the saw cuts through the wood. When you drive, you know when to shift gear without looking at the tachometer. When tightening a screw, if you are skilled enough, you know when it is tight and do not risk overtightening it. You can hear when you are nearing the end of the plank and adjust the force and speed to get a clean cut. Physical artefacts have these feedback and feedforward mechanisms built in, and we as users rely on them to cope with the tasks at hand. Digital artefacts typically have a more binary type of feedback. Notifications and status LEDs have their role, but in the flow of coping they interrupt the user and force an unnatural cognitive evaluation of the situation. Texture in digital artefacts could keep users in the flow of their actions and let them cope without thinking. To build texture, however, designers have to explore all the ways they can give users ongoing, unobtrusive feedback, for example shape-changing objects, color-changing surfaces, vibration, sound, or any other continuous signal. Little has been done in this area, and it remains open for design exploration.

Interactive artefacts, as we design them, are digital in nature, as that is our material. Because they are designed from the ground up, they seldom have much feedback inherent to their construction, at least not on purpose. We have to design the functions as well as the feedback, feedforward and affordances. When doing so, we tend to make them clear and unambiguous so that the artefact is easy to learn and use. This line of thinking runs counter to much of the older design of machines, where capability mattered more than ease of use and having to learn the trade was a given.

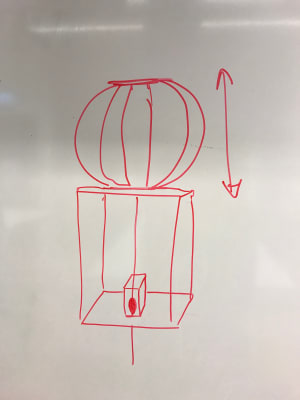

Textural feedback can also give us glanceable information that helps us cope. A hard drive that inflates to show how full it is would tell you when it is time to delete files, without you having to check it continuously. That kind of information would make coping easier and would also introduce social manipulability into the artefact: it could signal that you keep your workspace clean, or show off how much work you have done.

Skillful coping

Heyer (2018) builds upon earlier work on skillful coping and argues that nuance in feedback is key to skillful coping and the development of expertise. An artefact can offer different affordances to different people depending on their skill level and previous experience. Michael Polanyi's (2009) notion of tacit knowledge also lends credibility to this theory. Polanyi proposes that we have tacit skills and knowledge that are difficult to articulate or share. These skills can be, for example, craft-related: practitioners simply know the right amount of force to apply to cut into their material. Polanyi suggests they could never express or explain this knowledge without using the tool at hand.

This kind of rich feedback is unusual in digital artefacts, but we can see it in some specialist tools, such as Wacom drawing tablets. Here the pressure of the pen against the tablet lets a skilled artist draw with fluidly varying line widths and add emphasis through the force of her stroke. More advanced pens also take distance and angle into account. Wacom's tablets are a great example of rich feedback that affords skillful coping for the experienced artist. They also show, however, that a novice has fewer affordances than an expert until they learn to control the pen's pressure. This is something to keep in mind when designing software to be used with the pen.

What opportunities lie in textural feedback

Nowadays feedback is often discrete, expressed as a number, a blinking light or a line of text. It is good to know the percentage of battery left, but what decisions can users make if they do not know how fast the battery is draining? They have to check the battery indicator on their smartphone every time they want to know more. As designers, we could instead embrace less concrete feedback and create more nuance, richness and texture.

What is missing is the sensed, felt, or tacit knowledge. When you pick up a spray can, you feel how full or empty it is: you do not have to check a status meter to see that it is almost empty. We can design for this in digital artefacts too. However, it requires us to think differently.

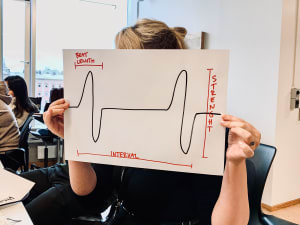

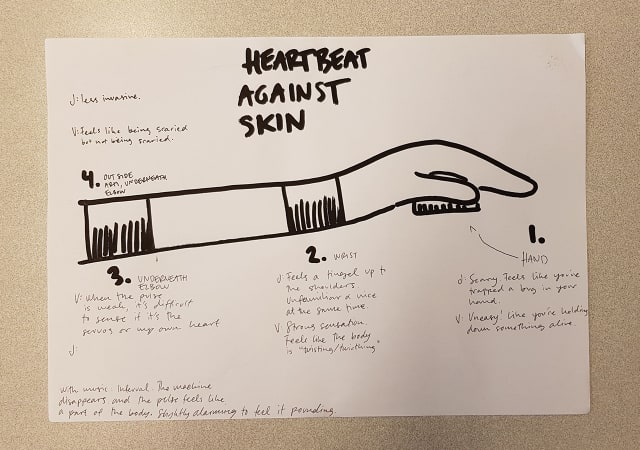

One of the prototypes my peer and I created was a servo strapped onto the wrist, giving the wearer a "heart beat" that we could adjust in several dimensions. As we tried out different rhythms and beats, the wearer received unobtrusive yet quite rich feedback, conveyed through the varying rhythm, strength and speed of the "heart beat". Experiencing the prototype's "heart beat" ourselves was strangely eerie: it somehow felt like our own. At times the sensation resembled high-intensity training, as the beat would race and then cool down.
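
Our prototype code is not reproduced in this essay, but the kind of adjustable pulse described above can be sketched as a function from time to servo angle. This is a hypothetical reconstruction, not our actual implementation. It assumes a hobby servo driven by angle setpoints in degrees. The class `Heartbeat`, its parameters (`bpm` for speed, `strength`, `dub_delay` for rhythm, `rest_angle`, `max_sweep`) and all pulse timings are illustrative choices.

```python
import math
from dataclasses import dataclass


def _pulse(phase: float, start: float, width: float) -> float:
    """Smooth 0 -> 1 -> 0 bump between start and start + width (fractions of a beat)."""
    if start <= phase < start + width:
        return math.sin(math.pi * (phase - start) / width)
    return 0.0


@dataclass
class Heartbeat:
    """Hypothetical 'lub-dub' heartbeat pattern for a wrist-mounted servo."""
    bpm: float = 60.0         # speed: beats per minute
    strength: float = 0.5     # 0..1, scales how far the servo sweeps
    dub_delay: float = 0.2    # rhythm: start of the second pulse, as a fraction of a beat
    rest_angle: float = 90.0  # assumed neutral servo angle, degrees
    max_sweep: float = 30.0   # assumed maximum deflection, degrees

    def angle_at(self, t: float) -> float:
        """Servo angle in degrees at time t (seconds)."""
        period = 60.0 / self.bpm
        phase = (t % period) / period                      # position within the current beat, 0..1
        lub = _pulse(phase, 0.0, 0.1)                      # strong first pulse
        dub = 0.6 * _pulse(phase, self.dub_delay, 0.08)    # weaker second pulse
        bump = min(lub + dub, 1.0)                         # never exceed max_sweep if pulses overlap
        return self.rest_angle + self.strength * self.max_sweep * bump

    def setpoints(self, duration: float, rate_hz: float = 50.0) -> list[float]:
        """Angles sampled at rate_hz, e.g. to stream to a servo controller."""
        n = int(duration * rate_hz)
        return [self.angle_at(i / rate_hz) for i in range(n)]


if __name__ == "__main__":
    calm = Heartbeat(bpm=60, strength=0.4)
    racing = Heartbeat(bpm=140, strength=0.9, dub_delay=0.15)
    print(len(calm.setpoints(2.0)), max(racing.setpoints(2.0)))
```

Sampling `setpoints` at a fixed rate keeps the pattern independent of whichever board drives the servo. Each parameter can be changed on the fly to make the beat faster, stronger or shift its rhythm.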

An industry that could easily incorporate richer textural feedback is the gaming industry. Game consoles already use haptic feedback, but it is often limited to occasional short bursts similar to notification vibrations on smartphones. Textural feedback could give it more nuance and depth. Players are used to learning new games and their user interfaces, as these often differ quite a lot, which may make them more receptive to novel forms of feedback. Competitive online games also involve a high level of skill development, which suits textural feedback. With the advanced haptics of the current generation of consoles, the heart beat we designed could easily be used in a gamepad to communicate character health or a similar status. It would fade into the background yet remain noticeable, especially when it changes or intensifies.
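
As a rough illustration of how such a status could drive the beat, the sketch below maps character health to the speed and strength of a pulse. The function `heartbeat_for_health`, the 60 to 140 bpm range and the linear mapping are assumptions made for this example. A real game would likely tune the curve through playtesting.

```python
def heartbeat_for_health(health: float) -> tuple[float, float]:
    """Map character health (1.0 = full, 0.0 = none) to (bpm, strength).

    Hypothetical linear mapping: a calm, faint pulse at full health that
    speeds up and strengthens as health drops.
    """
    health = max(0.0, min(1.0, health))  # clamp out-of-range values
    danger = 1.0 - health
    bpm = 60.0 + danger * 80.0           # 60 bpm at full health, 140 bpm at zero
    strength = 0.3 + danger * 0.7        # faint at full health, strongest at zero
    return bpm, strength


if __name__ == "__main__":
    for h in (1.0, 0.5, 0.1):
        bpm, strength = heartbeat_for_health(h)
        print(f"health={h:.1f} -> {bpm:.0f} bpm, strength {strength:.2f}")
```

A linear mapping is the simplest choice. A steeper curve near zero health would make the change more noticeable exactly when it matters most.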

Limitations of textural feedback

When designing texture, designers explicitly design for a learning curve, which widens the divide between novice and expert users even further. Making digital artefacts approachable while also enabling rapid skill development is a big challenge. I believe it might be addressed with multiple layers of feedback, as in the car, where the tachometer shows the engine speed while you also feel and hear it. Even so, it remains an open challenge.

We also have to take into account what this feedback does to the user. The "heart beat" felt very real and was tightly coupled to the real-world phenomenon it mimicked. It would be hard to use it for anything not associated with a pulse without changing it significantly. Holding your hand on top of a device that moves with a "heart beat" was deeply unsettling; it felt like smothering a small living thing. I struggle to see where that could be used outside of very niche experiences. Textural feedback is specific to the experience it is designed for and cannot always be replicated or abstracted into patterns or rules.

Conclusion

Nuanced textural feedback could be instrumental in developing new artefacts that allow for skillful coping. It could help create products that are easier and more pleasurable to use in the long run. Although, as I have discussed in this essay, the concept holds great potential, nuanced textural feedback remains largely unexplored in interaction design. Given the steep learning curve and the specificity of feedback patterns, it might be hard to find opportunities for implementation. If we design with this in mind, then as more devices adopt nuanced textural feedback we might be able to shape new user interface patterns that become recognizable and familiar.

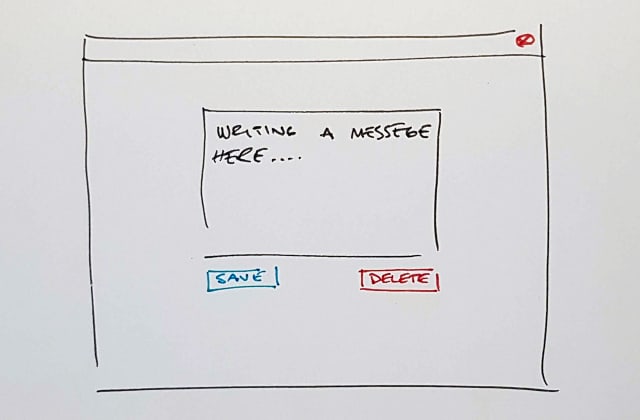

In this essay, I have focused only on physical feedback. Nevertheless, I see great potential in using textural feedback in purely digital artefacts, such as mobile and web applications. There it might be designed as a background function that runs continuously throughout the user's interaction with the application. This is a subject I want to explore further as a web developer.

References

Heyer, C. (2018). Designing for Coping. Interacting with Computers, 30(6), 492-506. https://doi.org/10.1093/iwc/iwy025

Polanyi, M., & Sen, A. (2009). The tacit dimension. University of Chicago Press.